Multi-Stakeholder Initiatives for Ethical Supply Chains: New Collaborations

Corporate Social Responsibility's Evolving Landscape

Corporate Social Responsibility (CSR) is no longer a niche concept relegated to the periphery of business operations. It is a critical factor that influences consumer decisions, helps attract talent, and shapes a company's long-term sustainability. The evolving landscape of CSR demands a strategic approach that moves beyond fulfilling legal obligations to actively embracing ethical practices and contributing positively to society.

This shift is driven by a multitude of factors, including growing awareness of environmental issues, social inequalities, and ethical concerns. Consumers are increasingly demanding transparency and accountability from the brands they support, leading to a greater emphasis on ethical sourcing, fair labor practices, and environmental stewardship.

The Intersection of Business and Social Good

Businesses are realizing that their success is closely linked to the well-being of the communities they operate in. Companies that actively engage in social initiatives often experience enhanced brand reputation, increased employee engagement, and a stronger connection with their customer base. This intersection of business and social good can create a virtuous cycle, where positive social impact supports positive business outcomes.

By addressing social and environmental challenges, companies can identify new opportunities for innovation, create shared value, and foster a more sustainable future for all.

Measuring and Reporting on CSR Initiatives

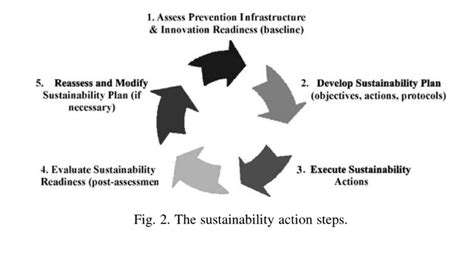

The effectiveness of CSR initiatives needs to be demonstrably measurable. This involves implementing robust systems for tracking and reporting on progress towards established goals. Quantifiable metrics allow companies to assess the impact of their efforts and demonstrate tangible value to stakeholders.
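As one illustration of a quantifiable metric, the sketch below computes how much of the gap between a baseline and a target has been closed. The metric names and all figures are hypothetical, chosen only to show the arithmetic, and a real reporting system would likely follow an established reporting framework rather than this simplified formula.

```python
def progress_toward_target(baseline: float, current: float, target: float) -> float:
    """Fraction of the baseline-to-target gap closed so far (1.0 means target met)."""
    if baseline == target:
        raise ValueError("baseline and target must differ")
    # Works for goals that go down (emissions) and goals that go up (audit coverage).
    return (baseline - current) / (baseline - target)


# Hypothetical figures for illustration only, not real company data.
metrics = {
    "co2_tonnes": {"baseline": 1000.0, "current": 800.0, "target": 600.0},
    "audited_suppliers_pct": {"baseline": 40.0, "current": 70.0, "target": 100.0},
}

report = {
    name: round(progress_toward_target(**values), 2)
    for name, values in metrics.items()
}
print(report)
```

Expressing every goal as a fraction of the gap closed makes metrics with different units comparable in a single report.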

Transparency in reporting is crucial. Clear communication about CSR performance builds trust with consumers, investors, and employees. Detailed and accessible reports highlight the efforts made and the results achieved, fostering greater accountability and demonstrating a genuine commitment to social good.

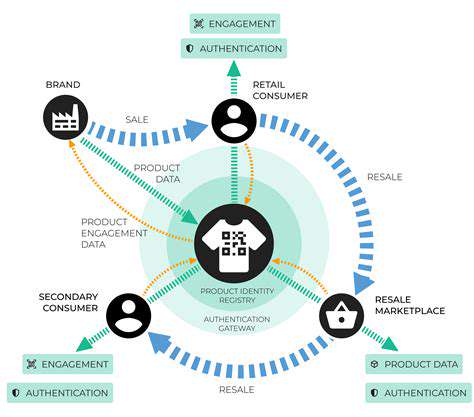

The Role of Technology in Enhancing CSR

Technological advancements are revolutionizing how companies can implement and measure their CSR strategies. Data analytics tools provide insights into environmental impact, social equity, and ethical labor practices, enabling more targeted and impactful interventions. These technologies help businesses identify areas for improvement and track progress in real time.

Digital platforms also facilitate engagement with stakeholders. Interactive platforms allow for direct communication and feedback, empowering consumers and communities to hold companies accountable for their actions and contribute to the development of more effective CSR strategies.

The Future of CSR and its Impact on Business

The future of CSR is one of continuous evolution and refinement. Companies are increasingly recognizing the importance of integrating CSR principles into their core business strategies, not as an add-on but as an integral part of their operations. This integration will lead to more sustainable and responsible business practices, benefiting both businesses and society.

This evolution will further impact the way businesses operate, shaping supply chains, product development, and employee relations. By embracing a holistic approach to CSR, companies can foster a more ethical and sustainable global economy.

Innovating for Future-Proof Ethical Supply Chains

Ethical Considerations in AI Development

Artificial intelligence is rapidly transforming various aspects of our lives, from healthcare to finance. However, the development and deployment of AI systems must be guided by strong ethical principles to ensure fairness, transparency, and accountability. This necessitates careful consideration of potential biases in algorithms, the privacy implications of data collection, and the potential for misuse of AI technology.

We need to proactively address ethical concerns before they escalate into widespread societal problems. This proactive approach requires ongoing dialogue and collaboration between researchers, developers, policymakers, and the public.

Promoting Inclusivity and Diversity in AI

AI systems are trained on vast datasets, and if these datasets reflect existing societal biases, the resulting AI systems can perpetuate and even amplify these biases. Ensuring inclusivity and diversity in the development process is crucial to mitigating these biases and building more equitable and just AI systems.

This requires actively seeking diverse perspectives and experiences throughout the development lifecycle. From data collection to algorithm design, diverse teams can help identify and address potential biases, leading to more robust and reliable AI systems.

Fostering Transparency and Explainability in AI

Many AI systems, particularly deep learning models, operate as black boxes, making it difficult to understand how they arrive at their decisions. This lack of transparency can erode trust in AI systems and hinder their responsible deployment.

Developing AI systems with greater transparency and explainability is essential. This involves exploring methods for making AI decision-making processes more understandable and auditable, fostering trust and accountability.
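One common way to make a black-box model more auditable is permutation importance: shuffle one input feature and measure how much accuracy drops. The sketch below is a minimal, standard-library illustration of that idea. The `toy_model` function and the data are hypothetical stand-ins, not a method prescribed by this article.

```python
import random


def accuracy(model, rows, labels):
    """Share of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, feature_index, seed=0):
    """Accuracy drop after shuffling one feature column across rows."""
    rng = random.Random(seed)  # fixed seed keeps the shuffle reproducible
    baseline = accuracy(model, rows, labels)
    shuffled_column = [row[feature_index] for row in rows]
    rng.shuffle(shuffled_column)
    permuted_rows = [
        row[:feature_index] + [value] + row[feature_index + 1:]
        for row, value in zip(rows, shuffled_column)
    ]
    return baseline - accuracy(model, permuted_rows, labels)


def toy_model(row):
    """Hypothetical black box: it only looks at feature 0."""
    return 1 if row[0] > 0.5 else 0


rows = [[0.1, 5.0], [0.9, 3.0], [0.2, 8.0], [0.8, 1.0], [0.7, 2.0], [0.3, 9.0]]
labels = [toy_model(row) for row in rows]

importance_used = permutation_importance(toy_model, rows, labels, feature_index=0)
importance_ignored = permutation_importance(toy_model, rows, labels, feature_index=1)
print(importance_used, importance_ignored)
```

A feature the model never uses always scores zero, since shuffling it cannot change any prediction. A feature the model relies on will usually show an accuracy drop.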

Enhancing Data Security and Privacy

AI systems often rely on vast amounts of personal data, raising significant concerns about data security and privacy. Robust data protection measures are essential to safeguard sensitive information and prevent misuse. The ethical use of data is paramount for building public trust and ensuring responsible AI development.

Stringent data governance policies, coupled with technical safeguards, are vital to mitigate risks and maintain the integrity of personal data.
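As an example of a technical safeguard, direct identifiers can be replaced with keyed hashes before data is analyzed. The sketch below uses Python's standard `hmac` and `hashlib` modules. The field names, the record, and the key are hypothetical, and in practice the key would come from a secrets manager rather than source code. Pseudonymization alone does not make data anonymous, so it complements governance policies rather than replacing them.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, secret_key: bytes, sensitive_fields: set) -> dict:
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in sensitive_fields else value
        for key, value in record.items()
    }


# Hypothetical key and record for illustration; never hard-code a real key.
demo_key = b"demo-key-not-for-production"
record = {"worker_id": "worker-123", "site": "Plant A", "hours": 38}

safe_record = pseudonymize_record(record, demo_key, {"worker_id"})
print(safe_record)
```

Because the same identifier always maps to the same token under one key, records can still be joined for analysis without exposing the raw identifier.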

Addressing Potential Bias in AI Algorithms

AI algorithms can inadvertently reflect and amplify existing societal biases present in the data they are trained on. This can lead to unfair or discriminatory outcomes in various applications. Addressing these biases is crucial to ensure fairness and equity in AI systems.

Techniques for detecting and mitigating bias in algorithms, combined with ongoing monitoring and evaluation, are crucial to prevent harmful outcomes and build trust.
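One simple detection technique is to compare how often a model produces a positive outcome for each group, a measure often called the demographic parity difference. The sketch below is a minimal illustration with made-up group labels and predictions. It is only one of several fairness metrics, and a small gap on this measure does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (value 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        if prediction == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates (0.0 = equal)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical data for illustration only.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates, gap)
```

Running a check like this on a schedule, not just once before launch, is one way to implement the ongoing monitoring described above.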

Ensuring Responsible Use of AI in Healthcare

AI has the potential to revolutionize healthcare by improving diagnostics, treatment planning, and drug discovery. However, responsible use in this sector depends on careful attention to patient privacy, data security, and the potential for algorithmic bias.

Establishing clear guidelines and regulatory frameworks for AI in healthcare is essential to ensure safety, efficacy, and equitable access for all.

Promoting Global Collaboration for Ethical AI

The development and deployment of AI are global challenges requiring international collaboration. Effective global cooperation is vital to establish common ethical standards and frameworks.

Sharing best practices, fostering dialogue, and coordinating efforts across nations are essential to ensuring the ethical and responsible development of AI for the benefit of all humanity.